How to implement and configure RAID 5 on RHEL / CentOS?

What is RAID 5 : Unlike RAID-4, RAID-5 does not use a dedicated parity drive. It stripes parity information, together with the data, across every disk in the array, so the array survives the failure of any single disk.
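To make the idea of parity concrete, here is a minimal sketch in shell arithmetic. It assumes a three-disk array in which each stripe holds two data blocks and one parity block computed as their XOR. The byte values and variable names are made up for illustration and have nothing to do with mdadm itself.

```shell
#!/bin/bash
# Two data blocks of one stripe, reduced to a single byte each (hypothetical values).
data_a=$(( 0xA5 ))
data_b=$(( 0x3C ))

# The parity block is the XOR of the data blocks in the stripe.
parity=$(( data_a ^ data_b ))

# If the disk holding data_b fails, XOR-ing the surviving blocks recovers it.
recovered_b=$(( data_a ^ parity ))

printf 'parity=0x%02X recovered_b=0x%02X\n' "$parity" "$recovered_b"
```

The recovered value equals the original data_b, which is why the array keeps working in degraded mode with one disk missing.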

Why we use RAID 5 : RAID-5 has become extremely popular among Internet and e-commerce companies because it gives administrators a safe level of fault tolerance without sacrificing the large amount of disk space that a RAID-1 configuration requires, and without the parity-disk bottleneck inherent in RAID-4. RAID-5 is especially useful in production environments where data is replicated across multiple servers, which shifts part of the need for disk redundancy away from any single machine.

When the array has a hot spare, RAID level 5 can replace a failed drive with the spare without user intervention. RAID 5 also supports hot-swap, the ability to remove a failed drive from a running system and replace it with a new working drive, so drive replacement can occur without a reboot. Hot-swap is useful in two situations. First, your case might not have enough room for the extra disks that the hot-spare feature needs, so when a disk fails you may want to replace it immediately to bring the array out of degraded mode and begin reconstruction. Second, even if the system has hot spares, it is useful to replace the failed disk with a new hot spare in anticipation of future failures.
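The disk space argument can be checked with simple arithmetic: a RAID 5 array of N equal disks gives up one disk's worth of space to parity, so N-1 disks' worth is usable. A small sketch follows. The function name and the 100 GB size are assumptions for illustration, and the three-disk minimum is the conventional one rather than a limit read from mdadm.

```shell
#!/bin/bash
# raid5_usable_gb DISKS SIZE_GB
# Prints the usable capacity of a RAID 5 array built from DISKS equal-sized
# members: one member's worth of space is consumed by parity.
raid5_usable_gb() {
    local disks=$1 size_gb=$2
    if [ "$disks" -lt 3 ]; then
        echo "expected at least 3 disks for RAID 5" >&2
        return 1
    fi
    echo $(( (disks - 1) * size_gb ))
}

# Three disks of a hypothetical 100 GB each, matching this tutorial's layout.
raid5_usable_gb 3 100
```

For comparison, mirroring the same data with RAID-1 leaves only half of the raw space usable.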

1. First we will check all the detected HDDs. The first three additional disks (/dev/sdb, /dev/sdc and /dev/sdd) are the ones we will use to build the RAID 5 device (/dev/md0).
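The original screenshot for this step is not available, so here is one possible way to list the detected disks. It is a sketch that reads /proc/partitions and assumes the disks show up with sdX names. The function name list_whole_disks is made up for this example.

```shell
#!/bin/bash
# list_whole_disks [FILE]
# Prints /dev/sdX for every whole disk listed in FILE, which must be in
# /proc/partitions format (default: /proc/partitions itself).
# Partitions such as sdb1 are skipped because their names end in a digit.
list_whole_disks() {
    awk 'NR > 2 && $4 ~ /^sd[a-z]+$/ { print "/dev/" $4 }' "${1:-/proc/partitions}"
}
```

Calling list_whole_disks with no argument on the server should print /dev/sdb, /dev/sdc and /dev/sdd among the results. Running "fdisk -l" as root additionally shows sizes and existing partition tables.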

2. Now we will create a RAID partition on each of the three drives (sdb, sdc, sdd), one at a time, starting with "/dev/sdb":

#fdisk /dev/sdb

A. Note : In this tutorial we use only the fdisk options described in the steps below.

B. Press "n" to create a new partition, then select "p" for a primary partition. We are making only one partition, so choose "1". Keep the default first and last cylinder by pressing Enter twice. Pressing "p" now displays the newly created partition (/dev/sdb1) with partition ID "83".

C. Because this must be a RAID partition, we will change its partition ID from "83" to "fd". Pressing "l" lists all available partition IDs.

D. Press "t", enter "fd" and press Enter. Pressing "p" again shows that the partition ID has changed to "fd", i.e. Linux raid autodetect.

E. The changes made so far exist only in memory. To make them permanent, press "w", which writes the partition table to disk and exits the fdisk prompt.

3. Do the same for the other two HDDs, /dev/sdc and /dev/sdd. When you are done, each disk has a single partition of type "fd": /dev/sdb1, /dev/sdc1 and /dev/sdd1.
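If you prefer not to type the same answers three times, the keystrokes from steps B to E can be piped into fdisk. This is only a sketch. It assumes the disks are empty and that your fdisk version asks its questions in the same order as described above, which is not guaranteed. DRY_RUN is a variable invented for this example, and by default the script only prints what it would do.

```shell
#!/bin/bash
# Keystrokes from steps B-E, one per line: n, p, 1, Enter, Enter, t, fd, w.
# With a single partition on the disk, "t" selects partition 1 automatically.
fdisk_keys() {
    printf '%s\n' n p 1 '' '' t fd w
}

# partition_disk DEVICE
# Prints what it would do unless DRY_RUN=0 is set in the environment.
partition_disk() {
    local dev=$1
    if [ "${DRY_RUN:-1}" = "0" ]; then
        fdisk_keys | fdisk "$dev"
    else
        echo "would run: fdisk_keys | fdisk $dev"
    fi
}

for dev in /dev/sdb /dev/sdc /dev/sdd; do
    partition_disk "$dev"
done
```

Run it once as is to review the output, then as root with DRY_RUN=0 to actually write the partition tables. Writing a partition table destroys whatever was on the disk, so double-check the device names first.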

4. Next we will implement RAID 5 by creating a software RAID 5 device, "/dev/md0":

#mdadm --create --verbose /dev/md0 --level=5 --raid-devices=3 /dev/sdb1 /dev/sdc1 /dev/sdd1

Note : To check the status of /dev/md0,

#mdadm --detail /dev/md0

Note : Wait until "Rebuild Status" in the output reaches 100%. To monitor it continuously instead of running the command again and again, run it under a tool such as "watch".

Once the rebuild is complete, the output should show "State : clean", and all three disks should be listed as "active sync".
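The two values to look at can also be pulled out of the "mdadm --detail" output with a little text processing. The helper names below are made up for this sketch, and the parsing assumes the usual "State :" and "Rebuild Status :" lines of mdadm's output.

```shell
#!/bin/bash
# array_state
# Reads "mdadm --detail" output on stdin and prints the value of the
# "State :" line (for example "clean" or "clean, degraded, recovering").
array_state() {
    sed -n 's/^[[:space:]]*State :[[:space:]]*//p' | sed 's/[[:space:]]*$//'
}

# rebuild_percent
# Prints the "Rebuild Status" percentage while a rebuild is running, or
# nothing once that line is no longer present in the output.
rebuild_percent() {
    sed -n 's/^[[:space:]]*Rebuild Status :[[:space:]]*\([0-9]*\)%.*/\1/p'
}
```

Typical use as root would be "mdadm --detail /dev/md0 | array_state". For a live view, something like "watch -n 5 mdadm --detail /dev/md0" or "cat /proc/mdstat" should also work, although the exact command used in the original screenshot is not known.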

5. And that's it: our RAID 5 device "/dev/md0" is ready. You now have two ways to use it: either implement LVM on top of it, or format it directly and mount it on a mount point. Keep in mind that if you want LVM on the RAID device, you should not follow the next steps; see my other article, "Implementing LVM over RAID 5", instead.

In this tutorial I am creating an ext3 filesystem and mounting it on a folder to access it.

#mkfs -t ext3 /dev/md0

6. Then, to mount "/dev/md0", we will create a new folder, "/Raid5_Data", and mount the device on it:

#mkdir /Raid5_Data

#mount /dev/md0 /Raid5_Data

Note : This mount is temporary and will not survive a reboot.

7. To make the mount permanent, edit the "/etc/fstab" file and add the line below:

/dev/md0 /Raid5_Data ext3 defaults 1 2

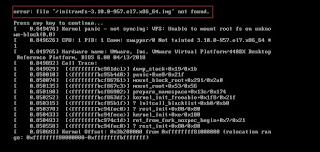

Note : Do not make any other modification. A broken "/etc/fstab" can drop the system into emergency mode on the next reboot.

To check that you edited it properly, execute "mount -a". If it shows any error, correct the entry before rebooting.
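Before running "mount -a" you can also sanity-check the new line itself. The function below is a made-up helper, not a standard tool. It insists on all six fields as written in step 7, even though fstab lets the last two default to 0, and it only checks the shape of the line, not whether the device or mount point exists.

```shell
#!/bin/bash
# check_fstab_line LINE
# Succeeds when LINE has six whitespace-separated fields and the last two
# (dump and fsck pass) are numbers; prints the reason otherwise.
check_fstab_line() {
    local -a fields
    read -r -a fields <<< "$1"
    if [ "${#fields[@]}" -ne 6 ]; then
        echo "expected 6 fields, found ${#fields[@]}"
        return 1
    fi
    case "${fields[4]}${fields[5]}" in
        *[!0-9]*) echo "dump and pass fields must be numeric"; return 1 ;;
    esac
    echo "ok"
}

check_fstab_line "/dev/md0 /Raid5_Data ext3 defaults 1 2"
```

A line that passes this check can still fail at mount time, so "mount -a" remains the real test.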

Now you can start using "/Raid5_Data". The second method, "LVM on RAID 5", is covered in another article of mine.

Hi ashish, thanks for the data you have provided.

I am stuck at a point. Can you help me?

I have finished configuring the RAID as per the above steps. I wanted to check if it's working fine, so I removed one of the hard disks and still found the data existing. But when I connect that hard disk back, the data should go for an automatic resync, right? That's not happening. I can still see the RAID running on only 2 hard disks. What should I do now?

Hi Nidhi,

Have you followed the same steps for disk fail-over as mentioned in " http://vlinux-freak.blogspot.in/2011/01/handling-raid-5-failover-when-1-disk.html "?

For detaching a faulty HDD or volume you need to give "mdadm /dev/md0 -f " .

Please follow the exact procedure as given in the above blog .

Thanks.

Thank u so much. I had missed adding the disk using -a command. Raid is working fine now. :)

Hey, thanks for this post. I've become so lazy that I hardly ever do anything without a tutorial these days:)
